Signals of the autonomic nervous system (ANS) can be used to discriminate between discrete categories of emotions. To date, the most successful emotion classification algorithms have been user-dependent (i.e. trained and tested on data from the same user). User-independent classification systems have been attempted, but their accuracy has been much lower. Emotion recognition for individuals with minimal communicative ability requires an accurate user-independent system, because these individuals are unable to provide the “ground truth” labels needed to train a classifier. The success of this endeavour depends on the development of a large database of emotion-related physiological data.
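
To make the distinction concrete, the minimal Python sketch below shows how the two evaluation schemes partition a set of recordings. It is an illustration only and not the BIAPT lab's pipeline: the function names `within_user_split` and `leave_one_user_out`, the `test_fraction` parameter, and the toy user IDs are all hypothetical.

```python
from collections import defaultdict


def _indices_by_user(user_ids):
    """Group sample indices by the user each sample was recorded from."""
    by_user = defaultdict(list)
    for idx, user in enumerate(user_ids):
        by_user[user].append(idx)
    return by_user


def within_user_split(user_ids, test_fraction=0.5):
    """User-dependent scheme: train and test on samples from the SAME user.

    Yields (user, train_indices, test_indices), one triple per user.
    """
    for user, idxs in _indices_by_user(user_ids).items():
        n_test = max(1, int(len(idxs) * test_fraction))
        cut = len(idxs) - n_test
        yield user, idxs[:cut], idxs[cut:]


def leave_one_user_out(user_ids):
    """User-independent scheme: test on a user never seen during training.

    Yields (held_out_user, train_indices, test_indices), one triple per user.
    """
    by_user = _indices_by_user(user_ids)
    for held_out, test in by_user.items():
        train = [i for user, idxs in by_user.items() if user != held_out for i in idxs]
        yield held_out, train, test


if __name__ == "__main__":
    # Hypothetical toy data: two recordings from each of three visitors.
    users = ["a", "a", "b", "b", "c", "c"]
    for user, train, test in leave_one_user_out(users):
        print(f"held out {user}: train={train} test={test}")
```

In the user-independent scheme no sample from the held-out user appears in the training set, which is why it needs labelled data from many other people.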

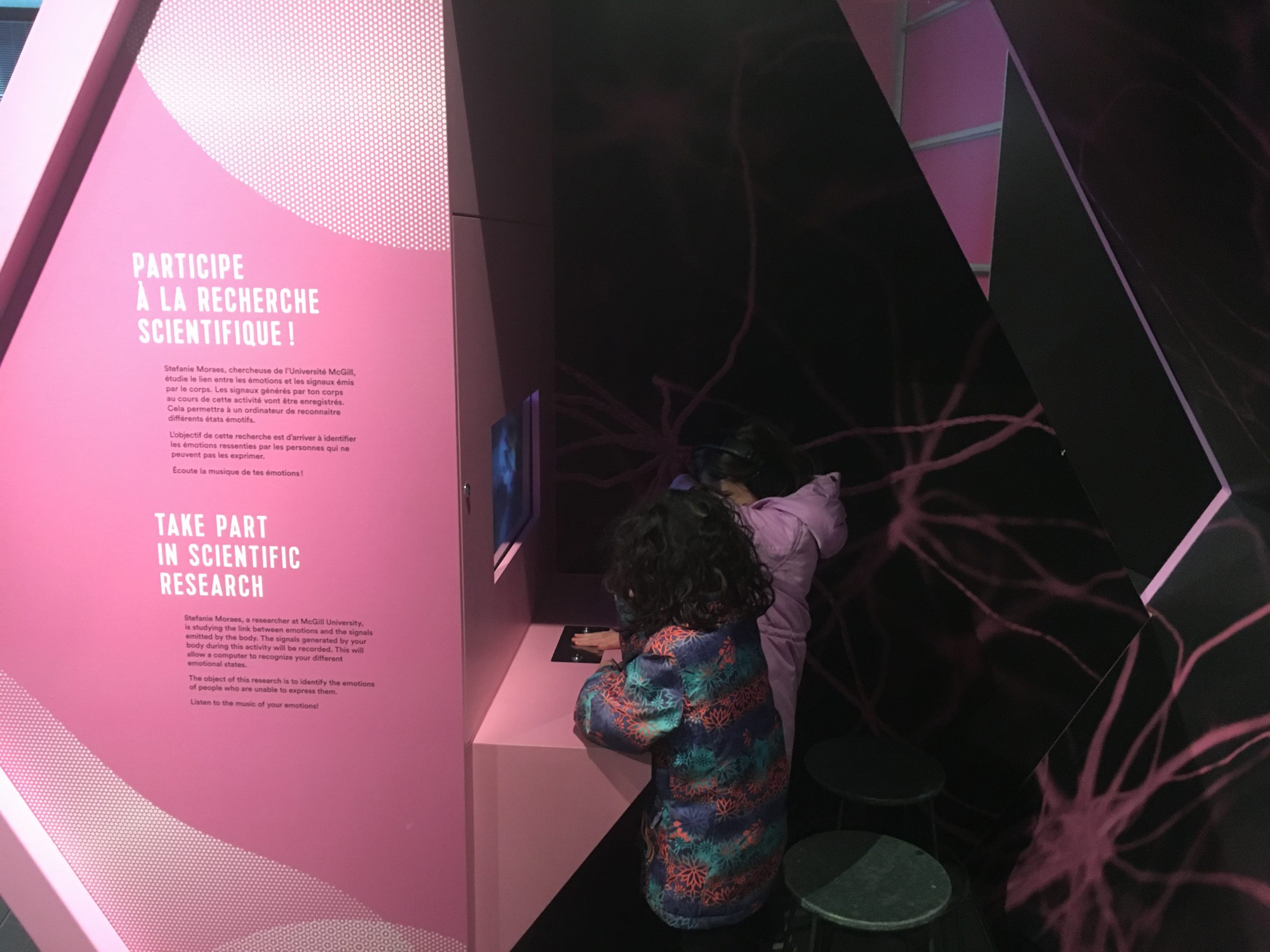

Mes émotions sont à fleur de peau is an interactive exhibit at the Montreal Science Center designed to address this problem. Visitors to the exhibit view a series of emotion-inducing videos while three physiological signals are recorded. At the end of each video, visitors are asked to report the emotion that they are experiencing. During this time, the biomusic software in the exhibit sonifies the visitor’s physiological signals so that they can hear the music their body generates when they experience different emotions. The visitors are then re-presented with the videos, with biomusic replacing the original video soundtrack.

To date, Mes émotions sont à fleur de peau has collected usable data from over 20,000 visitors. The BIAPT lab is currently using this dataset to develop an emotion recognition algorithm that will eventually be implemented in biomusic.

This project was funded by a Mitacs Accelerate grant.